IHR Note: We are proud to present the second article in the fifth annual volume of the International HETL Review (IHR), an academic article contributed to the February issue of IHR by Drs. Valerie Storey, Micki Caskey, Kristina Hesbol, James Marshall, Bryan Maughan, and Amy Dolan. In this action research study, the authors, members of the Carnegie Project on the Education Doctorate (CPED) Dissertation in Practice Awards Committee, examined the format and design of dissertations submitted as part of the reform of the educational doctorate. Twenty-five dissertations submitted as part of this project were examined through surveys, interviews, and analysis to determine whether the dissertations had changed as a result of the project and the redesign of the participating programs. Their results raise questions about the distinctiveness of educational and professional doctorates as compared to PhDs, and about the criteria to “demonstrate new knowledge” in the dissertation process.

Bios

Dr. Valerie A. Storey is an Associate Professor in the Department of Educational Leadership, School of Teaching, Learning and Leadership in the College of Education and Human Performance at the University of Central Florida where she is the Director of the EdD Executive Educational Leadership, and the EdD Education programs. She graduated with a Ph.D. in educational leadership and policy studies from Vanderbilt University. Dr. Storey can be contacted at [email protected]

Dr. Micki M. Caskey is Associate Dean of the Graduate School of Education and a Professor in the Department of Curriculum and Instruction at Portland State University. She draws on more than 20 years of teaching inner-city adolescents in middle and high schools. Her areas of specialization include middle grades education, content area literacy, action research, strategic instruction, and teacher education. Dr. Caskey can be contacted at [email protected].

Dr. Kristina A. Hesbol is an Assistant Clinical Professor and Program in the Educational Leadership and Policy Studies Department at the University of Denver, where she teaches graduate leadership courses. Dr. Hesbol earned a Ph.D. in Educational Leadership and Policy Analysis (Loyola University, Chicago), and holds current principal and superintendent endorsements. Dr. Hesbol can be contacted at [email protected]

Dr. James E. Marshall is Associate Dean of the Kremen School of Education and Human Development at California State University, Fresno where he also serves as Director of the Doctoral Program in Educational Leadership. He received his Ph.D. in Curriculum and Instruction from University of South Florida. Dr. Marshall can be contacted at [email protected]

Dr. Bryan D. Maughan is Coordinator of the Professional Practices Doctoral program in the Department of Leadership & Counseling at the University of Idaho. He graduated with a Ph.D. in Adult Organizational Leadership & Learning from the University of Idaho. Dr. Maughan can be contacted at [email protected]

Dr. Amy Wells Dolan is Associate Dean of the School of Education and Associate Professor at the University of Mississippi where she is the Principal Investigator for the Carnegie Project on the Education Doctorate initiative. She earned a Ph.D. in Higher Education from the University of Kentucky. Dr. Dolan can be contacted at [email protected]

Examining EdD Dissertations in Practice: The Carnegie Project on the Education Doctorate

Valerie A. Storey

University of Central Florida, U.S.A.

Micki M. Caskey

Portland State University, U.S.A.

Kristina A. Hesbol

University of Denver, U.S.A.

James E. Marshall

California State University, Fresno, U.S.A.

Bryan Maughan

University of Idaho, U.S.A.

Amy Wells Dolan

University of Mississippi, U.S.A.

Abstract

In 2007, 25 colleges and schools of education (Phase I) came together under the aegis of the Carnegie Project on the Education Doctorate (CPED) to transform doctoral education for education practitioners. A challenging aspect of the reform of the educational doctorate is the role and design of the dissertation or Dissertation in Practice. In response to consortium concerns, members of the CPED Dissertation in Practice Awards Committee conducted this action research study to examine the format and design of Dissertations in Practice submitted by (re)designed programs. Data were gathered with an online survey, interviews, and analyses of 25 Dissertations in Practice submitted in 2013 to the Committee. Results indicated few changes occurred in the final product, despite evidence of change in the Dissertation in Practice process. Findings contribute to debates about the distinctive nature of EdDs (and of professional doctorates generally) as distinct from PhDs, and about how the key criteria for “new knowledge to solve significant problems of practice” are demonstrated through the dissertation submission.

Keywords: Dissertation in Practice, Professional Doctorate, Doctoral Thesis, Education Doctorate

Introduction

During the past decade, epistemological and philosophical debates have surrounded the EdD (Caboni & Proper, 2009; Guthrie, 2009; Shulman, 2005, 2007; Zambo, 2011). These debates focus on the source, depth, and type of knowledge doctoral students need to become reflective practitioners and effective school leaders (Andrews & Grogan, 2005; Evans, 2007; Shulman, 2005, 2007; Shulman, Golde, Bueschel, & Garabedian, 2006), and on the different roles of EdD (Doctor of Education) and PhD (Doctor of Philosophy) programs that are failing to deliver these outcomes (Caboni & Proper, 2009; Guthrie, 2009). Some postulated that the programs were indistinguishable in some higher education institutions (Guthrie, 2009; Shulman, 2005, 2007; Shulman et al., 2006). Levine (2005) observed that the EdD lacked its own identity, failing to prepare school leaders who understand real school problems and have the ability to take action and make effective, lasting change. Additionally, EdD graduates often fail to impact students and teachers in their schools (Murphy & Vriesenga, 2005), declining to turn theory into practice, change practice, or challenge the status quo (Evans, 2007).

In 2007, institutional members of the Carnegie Project on the Education Doctorate (CPED) came together to re-imagine and redesign the EdD (Perry & Imig, 2008), clearly differentiating the Professional Practice Doctorate (EdD) from the PhD. A major outcome was the culminating EdD experience, validating the scholarly practitioner’s ability to solve Problems of Practice, and demonstrating the doctoral candidate’s ability “to think, to perform, and to act with integrity” (Shulman, 2005, p. 52).

In this article, we first set the study context, illustrating the epistemological and philosophical debates relating to the EdD, focusing on Dissertations in Practice (DiPs). Next, we discuss the developing design of DiPs, reflecting new models of educational research that emerge from Problems of Practice (PoPs). Finally, we report an action research study in which we investigated exemplar DiPs, nominated by 54 Phase I and II institutions, for the annual Dissertation in Practice Award. The purpose of the study was to generate valuable insights about the nature of professional practice doctorate dissertations.

Background

The Association of American Colleges and Universities defines the EdD as a terminal degree, presented as an opportunity to prepare for academic, administrative, or specialized positions in education. The degree favorably places graduates for leadership responsibilities or executive-level professional positions across the education industry (National Science Foundation, 2011). At most academic institutions where education doctorates are offered, the college or university chooses to offer an EdD, a PhD, or both (Osguthorpe & Wong, 1993). However, Shulman et al. (2006) contended that EdD and PhD programs are not aligned with their distinct theoretical purposes, and that poorly structured programs, marked by confusion of purpose, caused the EdD to be viewed as “PhD Light,” rather than a separate degree for a separate profession (p. 26).

Expanding Role of Influence

CPED encourages Schools of Education to reclaim the education doctorate (Shulman et al., 2006; Perry & Imig, 2008; Walker, Golde, Jones, Bueschel, & Hutchings, 2008) by developing EdD programs with scholarly practitioner graduates. The program design includes a set of courses, socialization experiences, and emphases that are distinct from those conventionally offered in PhD programs (Caboni & Proper, 2009; Guthrie, 2009). Bi-annual, three-day CPED convenings include graduate students, college deans, clinical faculty, teachers, college professors, and school administrators from member institutions. The first convening in Palo Alto, CA (June 2007), attended by 25 invited institutions, set the tone for future convenings by orchestrating an exchange of information with colleagues, grounded in a spirit of scholarly generosity, ethical responsibility, and integrity.

CPED Institutions, Phase 1, 2007-2010

| Arizona State University | University of Louisville |
| California State University System | University of San Francisco |
| Duquesne University | University of Southern California |
| Lynn University | University of Vermont |
| Northern Illinois University | University of Oklahoma |
| Pennsylvania State University | University of Nebraska-Lincoln |
| Rutgers University | University of Missouri-Columbia |
| University of Central Florida | University of Maryland |
| University of Connecticut | Virginia Commonwealth University |
| University of Florida | Virginia Tech University |
| University of Houston | Washington State University |
| University of Kansas | |

A second group of institutions responded to a call for CPED membership in 2010. The call, open to members of the Council for Academic Deans of Research Education Institutions (CADREI), included institutional commitments outlined in a Memorandum of Understanding. Identified as Phase II institutions, 26 new universities joined the consortium, beginning their work of EdD redesign at the fall convening held in Burlington, Vermont in 2011.

CPED Institutions, Phase II, 2011-2013

| Baylor University | Texas Tech University |
| Boston College | University of Akron |
| Florida State University | University of Alabama |
| Fordham University | University of Alaska Anchorage |
| Illinois State University | University of Arkansas |
| Indiana University | University of Dayton |
| Kansas State University | University of Hawaii |
| Kent State University | University of Idaho |
| North Carolina State University | University of Massachusetts Amherst |
| North Dakota State University | University of Mississippi |
| New York University Steinhardt | University of Missouri-St. Louis |
| Portland State University | University of Pittsburgh |
| Texas Southern University | University of San Francisco |

An ongoing discussion has centered on the nature of the final capstone of CPED-influenced programs, which Hamilton et al. (2010) suggest helps invigorate the use of a traditional academic tool. Many Phase I institutional members are farther into their programmatic implementation, with cohorts who have graduated and completed a DiP. Still, iterative questions abound among CPED institutions regarding the nature, scope, impact, and format of the DiP (Sands et al., 2013), as institutions learn from graduating cohorts (Harris, 2011).

CPED Institutions, Phase III, 2014

| Brigham Young University | Mills College |
| California State University System | Montana State University |
| – Bakersfield | Northeastern University |
| – Los Angeles | Northern Kentucky University |
| – Stanislaus | Nova Southeastern University |
| – San José State | Regis College (MA) |
| Kansas State University | Salisbury University |
| Fielding Graduate University | Seattle University |
| Florida A&M University | Tennessee State University |
| Frostburg State University | Texas A&M University |
| Georgia Regents University | Texas A&M University-Corpus Christi |
| Georgia Southern University | The George Washington University |
| High Point University | University of Auckland (New Zealand) |
| Johnson & Wales University | University of Denver |
| Kennesaw State University | University of Georgia |
| Loyola Marymount University | University of New Mexico |
| Miami University | University of North Texas |
| Michigan State University | University of Toronto (Canada) |

In April 2014, the consortium’s membership increased to 84, including two universities from Canada and one from New Zealand.

CPED’s commitment to support institutional flexibility in the DiP design makes it difficult to sort out issues of rigor and to advance common understandings about the nature of problems of practice (Sands et al., 2013). An informal survey of current CPED institutions (CPED, 2013) identified culminating projects including white papers, articles for publication, monographs, electronic portfolios, and the traditional five-chapter dissertation document.

Not surprisingly, the consortium has struggled to reach consensus on a DiP definition. Several drafts have been distributed on the consortium’s web site inviting feedback and comment. The current version is, “The Dissertation in Practice is a scholarly endeavor that impacts a complex problem of practice” (CPED, 2014). What is agreed upon by the consortium is that the DiP is focused on practice, and that local context matters. Faculty in EdD programs must have a clear sense of the nature of problems in practice among their constituent base, appropriate types of inquiry used to address those issues, and the manner in which results can be conveyed in authentic, productive ways (Sands et al., 2013).

Key Principles and Components of an Innovative DiP

The nature and format of the DiP diverge (Archbald, 2008; CPED, 2012; Sands et al., 2013). The first major discussion about the attributes of the CPED DiP occurred at the second convening (Fall, 2007), at Vanderbilt University (Storey & Hartwick, 2010). Peabody College faculty and recent program graduates described their DiP’s client-based process. Faculty expressed that the DiP’s primary objective is to provide a program candidate with an opportunity to show they are informed and have the critical skills and knowledge to address complex educational problems (Smrekar & McGraner, 2009). They indicated that the EdD candidate could exemplify a skill set including deep knowledge and understanding of inquiry, organizational theory, resource deployment, leadership studies, and the broad social context associated with problems of educational policy and practice (Caboni & Proper, 2009). Faculty asserted that while DiPs may vary by focus area, geographical location, institutions (school, district, agency, association), and scope (case study, systematic review, program assessment, program proposal), all share common characteristics related to rigorous analysis in a realistic operational context (Smrekar & McGraner, 2009). In the convening’s keynote speech, Guthrie (2009) argued that if capstone requirements for research and practice are the same in EdD and PhD programs, then program purposes, research preparation, and practitioner professional training have been woefully compromised.

During the Fall 2012 convening, consortium members tackled the development of a set of standards and criteria to assess the DiP. Questions regarding the requirements of the DiP remained, however. In response to a proposed standard that the DiP “is expected to have generative impact on the future work and agendas of the scholar practitioner” (CPED, 2012), members asked, “What is meant by generative impact? Is this doable in a dissertation capstone?” Members wondered if APA was the appropriate stylistic guide for the formatting of final products, and whether blogs, websites, graphic novels, or YouTube videos were appropriate products (Sands et al., 2013).

Participants at the 2009 convening developed six Working Principles to guide the consortium’s work (Perry & Imig, 2010):

The professional doctorate in education:

- Is framed around questions of equity, ethics, and social justice to bring about solutions to complex problems of practice.

- Prepares leaders who can construct and apply knowledge to make a positive difference in the lives of individuals, families, organizations, and communities.

- Provides opportunities for candidates to develop and demonstrate collaboration and communication skills to work with diverse communities and to build partnerships.

- Provides field-based opportunities to analyze problems of practice and use multiple frames to develop meaningful solutions.

- Is grounded in and develops a professional knowledge base that integrates both practical and research knowledge, that links theory with systemic and systematic inquiry.

- Emphasizes the generation, transformation, and use of professional knowledge and practice.

These principles guide institutions as they develop the DiP’s conceptual foundation. Scholarly practitioners blend practical wisdom with professional skills and knowledge to name, frame, and solve problems of practice. They disseminate work in multiple ways, with an obligation to resolve problems of practice by collaborating with key stakeholders, including partners from schools, the community, and the university. The second CPED principle, inquiry as practice, poses significant questions focused on complex problems of practice. By using various research, theories, and professional wisdom, scholarly practitioners design innovative solutions to improve problems of practice. Inquiry as practice requires the ability to gather, organize, judge, aggregate, and analyze situations, literature, and data with a critical lens (Sands et al., 2013). The final CPED principle relates directly to the DiP as the culminating experience that demonstrates the scholarly practitioner’s ability to solve problems of practice and exhibits the doctoral candidate’s ability “to think, to perform, and to act with integrity” (Shulman, 2005, p. 52).

In 2012, CPED formed a DiP Award Committee to develop assessment criteria for DiPs nominated for the CPED DiP of the Year Award, and to review submitted DiPs for the award. To develop the assessment criteria, the committee drew on Archbald’s (2008) work, which specified four qualities that a reimagined EdD doctoral thesis should address: (a) developmental efficacy, (b) community benefit, (c) stewardship of doctoral values, and (d) distinctiveness of design. In arguing for a problem solving study, Archbald advised that unlike a research dissertation, findings are not the goal. Rather, the problem-based thesis’ goals are decisions, changed practices, and better organizational performances.

At the June 2012 convening, hosted by California State University (Fresno), the DiP committee guided members in a Critical Friends activity, “Defining Criteria for a Dissertation in Practice”. Subsequently, the 2012 DiP Committee developed and circulated the draft criteria, inviting feedback from CPED members.

At the October 2012 convening, hosted by The College of William and Mary, the DiP Award Committee proposed its assessment criteria and requested additional feedback from CPED colleagues (CPED, 2013). The assessment rubric was revised in response to the feedback and was circulated to a wider consortium membership for public comment on CPED’s website. Review of this feedback led to refinement of the item criteria along with performance indicators:

- Demonstrates an understanding of, and possible solution to, the problem of practice. (Indicators: Demonstrates an ability to address and/or resolve a problem of practice and/or generate new practices.)

- Demonstrates the scholarly practitioner’s ability to act ethically and with integrity. (Indicators: Findings, conclusions and recommendations align with the data.)

- Demonstrates the scholarly practitioner’s ability to communicate effectively in writing to an appropriate audience in a way that addresses scholarly practice. (Indicators: Style is appropriate for the intended audience.)

- Integrates both theory and practice to advance practical knowledge. (Indicators: Integrates practical and research-based knowledge to contribute to practical knowledge base; Frames the study in existing research on both theory and practice.)

- Provides evidence of the potential for impact on practice, policy, and/or future research in the field. (Indicators: Dissertation indicates how its findings are expected to impact professional field or problem.)

- Uses methods of inquiry that are appropriate to the problem of practice. (Indicators: Identifies rationale for method of inquiry that is appropriate to the dissertation in practice; effectively uses method of inquiry to address problem of practice.)

The DiP Award Committee conducted two rounds of review for the DiP Annual Award, applying the above assessment criteria.

What Makes a Professional Practice DiP?

In this section, we turn to the international community for guidance in answering two major issues concerning the CPED Award Committee as they wrestled with the assessment criteria. First, what should a DiP look like? Second, how should DiP potential impact be measured?

Numerous national and international bodies govern qualifications and specifications for what doctoral level work should look like, e.g., European University Association (2005), Council of Deans and Directors of Graduate Studies, Australia (2007), Council of Graduate Schools (2008), Quality Assurance Agency (2012). Common to all is the emphasis on critical assessment of the originality of findings presented in the dissertation in the context of the literature and the research. Fulton, Kuit, Sanders, and Smith (2013) drew on their experience teaching in a Professional Practice Doctoral program at the University of Sunderland in England, concluding that the “ability to design research objectively and logically, and then to critically review and evaluate findings, is what makes it doctoral level, not the actual findings themselves” (p. 152). In their view, the difference between a PhD and a Professional Practice Doctorate is the demonstration of knowledge production that makes a significant contribution to the profession. O’Mullane (2005) noted that while the structure of a DiP may be similar to that of a PhD dissertation, it should contain additional reflective elements relating to personal reflections on the learning journey. But the question remains, what should a DiP look like? O’Mullane (2005) identified six outputs currently used by universities to demonstrate a significant contribution to the profession:

- Thesis or dissertation alone;

- Portfolio and/or professional practice and analysis;

- A reflection and analysis of a significant contribution to knowledge over time or from one major work;

- Published scholarly works recognized as a significant and original contribution to knowledge;

- Portfolio and presentation (performance in music, visual arts, drama); and

- Professional practice and internship with mentors.

These six DiP designs can be found within CPED; a group DiP design is also being explored. Universities are offering several DiP design choices: (a) Baylor University’s DiP can be thematic, assessment, action research, or three articles; (b) California State University San Marcos’ DiP can be a policy brief, executive summary, or series of articles; (c) Rutgers University’s DiP can be thematic, assessment, three article, action research, portfolio, or 3 “products” tied together with an introduction and conclusion; and (d) the University of Arkansas’ DiP can be an executive summary and article submission for publication in a peer reviewed scholarly journal (CPED data, 2013). O’Mullane (2005) also identified the essentials of a DiP:

- Create new knowledge.

- Make a significant contribution to your profession.

- Explicit conceptual framework.

- Literature review should provide the context to the research question, and should demonstrate that the question is worth asking.

- Demonstrable evidence of how ideas have been synthesized in the light of experience and in the context of academic literature, and how this has created new knowledge.

- Demonstration that findings have been reflected on, logically planned, and progressed through the research.

- Independently construct arguments for and against the findings and use evidence to support your interpretation.

- A distinctive voice should be clearly heard although what is said should be supported by evidence.

- Use the university’s designated reference style consistently. (pp. 149-150)

Fulton et al. (2013) suggested that “the creation of new knowledge and significant contribution” are critical, and likely to give any DiP assessor the most difficulty. Not only does “the creation of new knowledge and significant contribution” vary between professions, but the opportunity to influence a profession also tends to be based on position and length of service. To bring clarity to the problem of “significant contribution,” O’Mullane (2005) suggested two classifications, active or inactive, in terms of contribution to the profession. An active contribution generates new significant knowledge, which results in significant improvement in practice. An inactive contribution generates significant knowledge that has not yet been disseminated.

Current Rhetoric and Reality of DiPs: An Action Research Study

Methods of the Study

For this action research study (Lewin, 1944; Stringer, 2007), we gathered data through an online survey of the eight-member DiP Award Committee. Members came from a variety of institutions; four had previous Dissertation Award Committee experience with American Educational Research Association special interest groups. The authors of this paper were among those who provided data.

Instrument

Quantitative and qualitative data were gathered using a Qualtrics-administered survey with Likert responses and assessors’ comments. Each survey item was scored 1 to 4, with 1 indicating “unacceptable,” 2 “developing,” 3 “target,” and 4 “exceptional.”

Procedure

Each member of the committee responded to an email invitation to complete a blind review of four DiP synopses submitted by the nominated candidate. Two committee members assessed each synopsis against the assessment item criteria, with a third assessment by the committee chair, as needed. Based on the quantitative scores and qualitative comments of the synopses, the pool was narrowed from 25 to 6 DiPs. A second blind review of the full text of the six DiPs was conducted with each committee member reading the full DiP and submitting criteria assessment data in Qualtrics.

Limitations

The authors of this paper are DiP Award Committee members, which could cause bias in interpretation. The committee members’ initial judgments were based on the submitted synopses; some may not have adequately represented the overall DiP’s quality. The sample was neither random nor sufficiently large to draw generalizable conclusions. Fourteen DiPs came from three Phase I institutions. While it is not surprising that most submissions came from Phase I institutions, multiple submissions from any one institution were unexpected.

Data Analysis

Descriptive statistics were calculated for each DiP synopsis assessed on the six CPED assessment items (Table 1). Item means ranged from 2.78 to 2.94 with an overall mean of 2.86. The median was 3 (“Target”) for each of the six items and the mode was 3 (“Target”) for all items except item #5, where the mode was 2.

Table 1. Item Statistics for the DiP Award Assessment Survey

| Item | DiP Award Assessment Item | Mean | SD |
| 1. | Demonstrates an understanding of, and possible solution to, the problem of practice. | 2.82 | 0.720 |
| 2. | Demonstrates the scholarly practitioner’s ability to act ethically and with integrity. | 2.90 | 0.580 |
| 3. | Demonstrates the scholarly practitioner’s ability to communicate effectively in writing to an appropriate audience in a way that addresses scholarly practice. | 2.94 | 0.793 |
| 4. | Integrates both theory and practice to advance practical knowledge. | 2.78 | 0.679 |
| 5. | Provides evidence of the potential for impact on practice, policy, and/or future research in the field. | 2.86 | 0.808 |
| 6. | Uses methods of inquiry that are appropriate to the problem of practice. | 2.86 | 0.639 |

Across the range of 300 individual responses (2 reviewers x 25 dissertations x 6 survey items), a 1 (Unacceptable) was selected only four times, while 4 (Exceptional) was selected 50 times. The remaining 246 responses were either a 2 (Developing) or 3 (Target), indicating considerable restriction of range at both ends of the scale. As for measures of central tendency, the median of 3 (Target), and a grand mean of 2.86, indicate that overall, reviewers found the DiP to be near “Target” based on the review criteria.
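The arithmetic behind these summary figures can be checked with a short Python sketch. It uses only the item means from Table 1 and the response counts reported above. One assumption is labeled in the code: every item received the same number of ratings (2 reviewers x 25 DiPs), so the grand mean equals the simple average of the six item means. Variable names are illustrative only.

```python
# Item means as reported in Table 1 (items 1 to 6).
item_means = [2.82, 2.90, 2.94, 2.78, 2.86, 2.86]

# Assumption: each item received the same number of ratings
# (2 reviewers x 25 DiPs), so the grand mean is the plain
# average of the item means.
grand_mean = round(sum(item_means) / len(item_means), 2)

# Total number of individual responses, as described in the text.
reviewers, dips, items = 2, 25, 6
total_responses = reviewers * dips * items

# Counts at the two ends of the scale, as reported in the text.
unacceptable_count = 4   # rating of 1
exceptional_count = 50   # rating of 4

# Responses that were either 2 (Developing) or 3 (Target).
middle_count = total_responses - unacceptable_count - exceptional_count
middle_share = round(100 * middle_count / total_responses, 1)

print(grand_mean, total_responses, middle_count, middle_share)
```

Under these assumptions, the reported grand mean of 2.86 and the 246 middle responses are mutually consistent, and the middle two categories account for 82% of all ratings.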

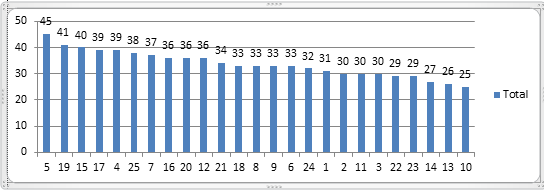

Figure 1 shows a frequency distribution of total scores for the 25 DiPs submitted for review. The numbers on the X-axis represent a unique identifier for the 25 reviewed DiPs. The scale ranged from 0-48 possible points (6 items of the survey x 4 maximum points allowed x 2 reviewers). The observed scores ranged from 25 to 45 with no obvious natural breaks in the distribution.

Figure 1. Frequency distribution of scores across 25 DiP synopses.

Prior to scoring, the DiP Award Committee predicted that an analysis of the score distribution might reveal a natural break that could be useful to narrow the pool for further review. Because there were no obvious natural breaks, the committee, after careful review of both the quantitative and the qualitative data, agreed that the top six scoring DiPs would move forward for a full text review.
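As a rough illustration of the quantitative part of this narrowing step, the Python sketch below computes the attainable score bounds and selects the six highest totals. The totals in `example_totals` are hypothetical and are not the study's data; the function name `top_n` and the tie-breaking rule (lower identifier first) are also assumptions. Note that the text gives the scale as 0-48; with ratings of 1 to 4, the lowest attainable total would be 12 if every item is rated by both reviewers. The committee also weighed qualitative comments, which this sketch does not model.

```python
ITEMS, REVIEWERS = 6, 2
MIN_RATING, MAX_RATING = 1, 4

# Bounds on a DiP's total score when every item is rated by both reviewers.
max_total = ITEMS * REVIEWERS * MAX_RATING
min_total = ITEMS * REVIEWERS * MIN_RATING


def top_n(totals, n=6):
    """Return the n DiP identifiers with the highest total scores.

    Ties are broken by the lower identifier (an assumption made here,
    not a rule stated in the study).
    """
    ranked = sorted(totals.items(), key=lambda pair: (-pair[1], pair[0]))
    return [dip_id for dip_id, _ in ranked[:n]]


# Hypothetical totals for eight DiPs, for illustration only.
example_totals = {1: 25, 2: 45, 3: 38, 4: 41, 5: 33, 6: 40, 7: 36, 8: 44}

print(max_total, min_total)
print(top_n(example_totals))
```

A simple rank cut like this is what remains when, as reported, the distribution shows no natural break to separate a leading group.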

Results

Twenty-four of the DiPs followed the traditional five-chapter dissertation format; one used a non-traditional format. All had single authors. Two submissions implemented the results of their study and showed immediate impact. The average length of the 25 DiPs was 212 pages, with a range of 85 to 377 pages. Four studies used quantitative methods, 17 used qualitative methods, and four used mixed methods. The methodologies used in 10 studies were action research, case study, grounded theory, and phenomenology.

In addition to the numerical ratings, the DiP Committee members commented on quality and overall alignment with the DiP assessment criteria. For DiPs that received similar or identical marks, committee members reviewed the reflective comments, re-read the synopses, and continued to meet via Skype, Adobe Connect, or phone. The inclusion of qualitative data provided a point of reference to triangulate perspectives regarding the eventual five finalists.

Critical reflections and subsequent comments can often appear somewhat tenuous. Elements of ambiguity may exist in such reviews, and reviewers may be guilty of overgeneralizing. As the process continued, however, clear inter-rater agreement (Creswell, 2013) was evident among committee members.

The qualitative data confirmed the quantitative findings. Regarding those dissertations where the mean was closer to the “exceptional” category, some reviewers stated:

- A timely paper and excellent report

- Good example of an important problem of practice

- High potential for impact

- Meaningful and insightful

- Well-developed

- Important examples of a problem of practice

- Good interdisciplinary foundation

Discussion

A characteristic of all submitted DiPs was that they addressed immediate needs in practice. Some were assessments of existing programs; others delved into theoretical constructs and inquired about their applicability to educational issues within the local, regional, or national context. Among these studies, a few took their inquiry directly into the classroom. While the DiPs that rose to the top during the review process were regarded by their submitting institutions as exemplary, not all addressed all of the assessment criteria in their synopses.

Critical assessment of the DiPs indicated that most CPED member institutions remain unclear about what constitutes an exemplary DiP. While the conclusions drawn from the 2009 Peabody convening asserted that all share a set of common characteristics related to rigorous analysis in a realistic operational setting (Smrekar & McGraner, 2009), the DiP Award Committee’s analysis of 25 submissions revealed a continuum of alignment to the Working Principles for Professional Practice Programs.

The discrepancy in alignment to the Working Principles may indicate an analogous disconnect between the central principles that the consortium developed in 2009 to guide all programs and what is, in reality, currently being practiced among Phase I and II CPED institutions. The assumption that these principles would be tested during Phase II appears to be flawed, as the analysis of the 2013 data bears out. Alternatively, the discrepancy in alignment to the Working Principles may reflect the need for additional refinement and discussion of the rubric used for review by the DiP Award Committee. Because the rubric evolved from a community-based process, further refinements may require similar processes of discussion and recommendation from the broader constituency.

Many of the DiP submissions lacked clear evidence of impact on practice, a characteristic that is foundational to the Working Principles. While the submissions demonstrate the authors’ ability to generate solutions, it was unclear in a majority of them whether a complex problem of practice had been identified. It was also unclear in most submissions whether the author included implications for generative solutions in the local and/or broader context. The six Core Principles of Improvement Science, which draw on the work of Bryk, Gomez, and Grunow (2010), suggest the following:

- Make the work problem-specific and user-centered.

- Variation in performance is the core problem to address.

- See the system that produces the current outcomes.

- We cannot improve at scale what we cannot measure.

- Anchor practice improvement in disciplined inquiry.

- Accelerate improvements through networked communities.

Concluding Remarks

The analysis of DiPs and the narrative presented here indicate both the challenges institutions face and the pervasiveness of those challenges as faculty wrestle with the design of a professional practice doctorate program. While challenging, the identification of common issues provides an opportunity for institutions to engage in conversation with others that appear to have found solutions to some of the challenges. Such conversation is a start to ensuring program rigor and consistency at both the national and international level. Learning in situ develops praxis in education. At the core, the creation of generative knowledge forms a substantive epistemology that guides the construction of meaning and builds confidence in decision makers.

To re-imagine and redesign the EdD will require innovation. The growing membership of CPED has made that commitment and is now collaborating on a global stage to rethink the fundamental purpose of doctoral education, with a specific focus on the professional practice doctorate, the EdD.

References

Andrews, R., & Grogan, M. (2005). Form should follow function: Removing the EdD. dissertation from Ph.D. straightjacket. UCEA Review, 46(2), 10–13.

Archbald, D. (2008). Research versus problem solving for the Education Leadership doctoral thesis: Implications for form and function. Educational Administration Quarterly, 44(5), 704–739.

Bryk, A. S., Gomez, L. M., & Grunow, A. (2010). Getting ideas into action: Building networked improvement communities in education. Stanford, CA: Carnegie Foundation for the Advancement of Teaching.

Caboni, T., & Proper, E. (2009). Re-envisioning the professional doctorate for educational leadership and higher educational leadership: Vanderbilt University’s Peabody College EdD. program. Peabody Journal of Education, 84(1), 61–68.

Carnegie Project on the Education Doctorate. (2012). Consortium members. Retrieved from http://cpedinitiative.org/consortium-members

Carnegie Project on the Education Doctorate. (2013). Dissertation in Practice of the Year Award. Retrieved from http://cpedinitiative.org/dissertation-practice-year-award

Council of Australian Deans and Directors of Graduate Studies. (2007). Guidelines on professional doctorates. Adelaide, Australia: Council of Australian Deans and Directors of Graduate Studies. Retrieved from https://www.gs.unsw.edu.au/policy/findapolicy/abapproved/policydocuments/05_07_professional_doctorates_guidelines.pdf

Council of Graduate Schools. (2008). Task force report of the professional doctorate. Washington, DC: Author.

European University Association. (2005). Salzburg principles, as set out in the European Universities’ Association’s (EUA) Bologna seminar report. Retrieved from http://www.eua.be/eua/jsp/en/upload/Salzburg_Conclusions.1108990538850.pdf

Evans, R. (2007). Comments on Shulman, Golde, Bueschel, and Garabedian: Existing practice is not the template. Educational Researcher, 36(6), 553–559. doi: 10.3102/0013189X07313149

Fulton, J., Kuit, J., Sanders, G., & Smith, P. (2013). The professional doctorate: A practical guide. New York, NY: Palgrave Macmillan.

Guthrie, J. (2009). The case for a modern Doctor of Education Degree (EdD.): Multipurpose education doctorates no longer appropriate. Peabody Journal of Education, 84(1), 2–7.

Hamilton, P., Johnson, R., & Poudrier, C. (2010). Measuring educational quality by appraising theses and dissertations: Pitfalls and remedies. Teaching in Higher Education, 15(5), 567–577.

Harris, S. L. (2011). Reflections on the first 2 years of a doctoral program in educational leadership. In S. Harris (Ed.), The NCPEA handbook on doctoral programs in educational leadership: Issues and challenges. Retrieved from Open Educational Resources Commons website: http://www.oercommons.org/courses/reflections-on-the-first-2-years-of-a-doctoral-program-in-educational-leadership

Levine, A. (2005). Educating school leaders. Washington, DC: The Education Schools Project. Retrieved from www.edschools.org.

Lewin, K. (1944). The solution of a chronic conflict in industry. Proceedings of the Second Brief Psychotherapy Council. Reprinted in B. Cooke & J. F. Cox (Eds.), Fundamentals of action research, volume I, pp. 3–17. London: Sage.

Murphy, J., & Vriesenga, M. (2005). Developing professionally anchored dissertations: Lessons from innovative programs. School Leadership Review, 1(1), 33–57.

National Science Foundation. (2011). Numbers of doctorates awarded in the United States. Retrieved from http://www.nsf.gov/statistics/infbrief/nsf12303/

Osguthorpe, R. T., & Wong, M. J. (1993). The PhD versus the EdD: Time for a decision. Innovative Higher Education, 18(1), 47–63.

O’Mullane, M. (2005). Demonstrating significance of contribution to professional knowledge and practice in Australian professional doctorate programs: Impacts in the workplace and professions. In T. W. Maxwell, C. Hickey, & T. Evans (Eds.), Working doctorates: The impact of professional doctorates in the workplace and professions. Geelong, Victoria, Australia: Deakin University.

Perry, J. A., & Imig, D. G. (2008, November/December). A stewardship of practice in education. Change, 42–48.

Perry, J. A., & Imig, D. G. (2010, May). Interrogation of outcomes of the Carnegie Project on the Education Doctorate. Paper presented at the annual meeting of the American Educational Research Association, Denver, CO.

Quality Assurance Agency. (2012). UK quality code for higher education. Part B: Assuring and enhancing academic quality, Chapter B11: Research degrees. Retrieved from http://www.qaa.ac.uk/Publications/InformationAndGuidance/Documents/Quality-Code-Chapter-B11.pdf

Rivera, N. (2013). Cooperative learning in a community college setting: Developmental coursework in mathematics. Arizona State University, Tempe, AZ. Retrieved from CPED Dissertation in Practice Award Winner website: http://cpedinitiative.org/news-item/2013-dissertation-practice-year-award-winners-honorable-mentions-announced

Sands, D., Fulmer, C., Davis, A., Zion, S., Shanklin, N., Blunck, R., & Ruiz-Primo, M. (2013). Critical friends’ perspectives on problems of practice and inquiry in an EdD program. In V. A. Storey (Ed.), Redesigning professional education doctorates: Applications of critical friendship theory to the EdD (pp. 63–81). New York, NY: Palgrave Macmillan.

Shulman, L. S. (2005). Signature pedagogies in the professions. Daedalus, 134(3), 52–59. doi: 10.1162/0011526054622015

Shulman, L. S. (2007). Practical wisdom in the service of professional practice. Educational Researcher, 36, 560–563. doi: 10.3102/0013189X07313150

Shulman, L. S., Golde, C. M., Bueschel, A. C., & Garabedian, K. J. (2006). Reclaiming education’s doctorate: A critique and a proposal. Educational Researcher, 35(2), 25–32.

Smrekar, C., & McGraner, K. (2009). From curricular alignment to the culminating project: The Peabody College EdD capstone. Peabody Journal of Education, 84(1), 48–60.

Storey, V. A., & Hartwick, P. (2010). Critical friends: Supporting a small, private university face the challenges of crafting an innovative scholar-practitioner doctorate. In G. Jean-Marie & A. H. Normore (Eds.), Educational leadership preparation: Innovative and interdisciplinary approaches to the EdD and graduate education. New York, NY: Palgrave Macmillan.

Stringer, E. T. (2007). Action research (3rd ed.). London, UK: Sage.

Walker, G. E., Golde, C. M., Jones, L., Bueschel, A. C., & Hutchings, P. (2008). The formation of scholars: Rethinking doctoral education for the twenty-first century. San Francisco, CA: Jossey-Bass.

Zambo, D. (2011). Action research as signature pedagogy in an Education Doctorate program: The reality and hope. Innovative Higher Education, 36(4). doi: 10.1007/s10755-010-9171-7

This feature article was accepted for publication in the International HETL Review (IHR) after a double-blind peer review involving three independent members of the IHR Board of Reviewers and two revision cycles. Accepted for publication in July 2014 by Dr. Lorraine Stefani (University of Auckland, New Zealand), IHR Senior Editor.

Suggested citation:

Storey, V. A., Caskey, M. M., Hesbol, K. A., Marshall, J. E., Maughan, B., & Dolan, A. W. (2014). Examining EdD dissertations in practice: The Carnegie Project on the Education Doctorate. International HETL Review, Volume 5, Article 2. https://www.hetl.org/examining-edd-dissertations-in-practice-the-carnegie-project-on-the-education-doctorate

Copyright [2015] V. A. Storey, M. M. Caskey, K. A. Hesbol, J. E. Marshall, B. Maughan and A. W. Dolan.

The authors assert their right to be named as the sole authors of this article and to be granted copyright privileges related to the article without infringing on any third party’s rights including copyright. The authors assign to HETL Portal and to educational non-profit institutions a non-exclusive licence to use this article for personal use and in courses of instruction provided that the article is used in full and this copyright statement is reproduced. The authors also grant a non-exclusive licence to HETL Portal to publish this article in full on the World Wide Web (prime sites and mirrors) and in electronic and/or printed form within the HETL Review. Any other usage is prohibited without the express permission of the authors.

Disclaimer

Opinions expressed in this article are those of the author, and as such do not necessarily represent the position of other professionals or any institution. By publishing this article, the author affirms that any original research involving human participants conducted by the author and described in the article was carried out in accordance with all relevant and appropriate ethical guidelines, policies and regulations concerning human research subjects and that where applicable a formal ethical approval was obtained.